TLDR: There’s going to be a shit ton of temporarily useful content; businesses need to have a quick way to make the worthwhile content stand out. (Thanks Joseph)

There are two ideas I have been thinking about over the past few months that I want to document here. The first has to do with disruption, and the second has to do with novelty vs usefulness.

The late Clay Christensen pointed out that major technology shifts take place when there is a massive change in the price or available quantity of something. When there was a 10x reduction in the price of computers, the personal computing revolution took place. When the price decreases, the potential applications for the technology increase significantly. When prices decrease and potential applications increase, the quantity of the technology also increases, and with it come new platforms for new tools and previously non-existent technologies.

I’ve heard this idea too many times to count, but something I never thought deeply about was why certain ideas within the new platform do or don’t succeed. The specific area of interest for me is how the new platform creates opportunities that previously couldn’t exist, and therefore everything that wasn’t possible before is novel. When I use the term novel, I mean that since it is new, its significance seems highly valued relative to the past. That being said, if the new idea were projected out into the new reality, the novelty would eventually wear off and the idea would no longer be as valuable as it once was.

Another way to think of it is the bundling and unbundling effect, which I believe comes from an American economist who studied the cross-country trucking industry and the standardization of freight trucks. Sadly, I don’t know the original reference. The summary point is that when getting packages safely and cheaply across the country was difficult, the creation of the 18-wheeler semi-truck (actually, I’m pretty sure the first version was not an 18-wheeler, so I’m butchering the facts) was an innovation that solved a large problem. Once the trailer-hauling semi-truck was widely accepted as the best option for hauling freight, the machine parts were modularized so that trucking companies could delegate part production and reduce costs. At the same time, modularizing the trucks allowed new entrants into the trucking space, which created differentiated offerings and options for cross-country freight hauling.

Once hauling freight was predictable, new industries that couldn’t exist before emerged. The trucking industry created new modes of consumption with federated infrastructure. The big box stores, like Walmart or Costco, that couldn’t exist before were now possible (or the early 20th century parallel). As a consequence of the new modes of freight shipping creating a new possible economic model, parking-lot-based stores formed and the urban landscape changed. I’m extrapolating here, but I imagine a number of previously non-existent suburban environments formed due to this single freight-based innovation.

The most exciting part of this to me is how the previously nascent technologies around freight positively exploded as a result of the new advancements.

In line with this, the new urban landscape fostered smaller environments that created new markets of supply and demand that didn’t previously exist. I imagine this also put stress on areas that never expected such high throughput. I’m sure new roads and new laws needed to be developed, and in the process more and more markets emerged.

I mention all of this because I find an interesting parallel with something I’ve been thinking about today: the proliferation of video content. In relation to traditional disruption theory, we had a major event that increased the quantity of video production without necessarily changing the cost of production. Specifically, the low cost of individual video creation has already transformed the way we communicate personally, as seen through social media, but the impact on businesses has been delayed. Although the cost of video production had been low for quite a while, it took the recent societal forces around Covid to turn nearly every business into a video producer.

I think a good parallel is to think of Zoom as being the semi-truck and the need to interact with one another during a pandemic as being the freight to haul. Naturally, as Zoom became the fix-all solution, the tool that started as a necessity became fraught with problems for specific use cases. For one, Zoom wasn’t designed for highly interactive environments or planning events ahead of time. As a result, a whirlwind of new applications emerged to complement the lacking areas, such as Slido for questions or Luma for event planning.

The need for higher-fidelity interactions grew once the new normal of interacting over video was no longer a novelty. For one, people wanted to have fun. Games became a common way to spend time together, but beyond actual video games, an entire category of Zoom-based games emerged. Things like GatherTown or Pluto Video came to light. Similarly, as the interaction model of being online together and participating in a shared online experience was normalized, existing platforms like Figma were used for non-traditional purposes to create synchronous shared experiences.

I bring these examples up because I think the ones above are obviously novel ideas that won’t be valuable for extended periods of time. That being said, the seed of the novelty comes from a core experience that will likely reemerge in various places in the future.

Continuing with the Zoom thread, the explosion of video communication and alternative ways of communicating with video has created an explosion of video content that previously didn’t exist. Of course, there is novelty around having this much content, and I believe the quantity will only continue to increase. As with many things, the transition from in-person to digital was a one-way door for many industries, where the reduction in cost and the surprising resilience of results have created a new normal.

I won’t try an exhaustive analysis of video, but one area that is particularly interesting is the machine learning space. Over the past decade there have been huge improvements in computer vision, deep learning, and speech-to-text research, but they have been expensive and the applications have been very specific.

There is an exciting intersection between the sheer quantity of content being produced and the widely accessible machine learning APIs that make it possible to analyze that content cheaply in a way that wasn’t previously possible. The novelty effect here seems ripe for misapplication. Specifically, the thing that wasn’t generally possible and the thing that previously wasn’t widely present are converging at the same time.

More specifically, there is a ton of video content being produced by individuals and businesses. Naturally, given the circumstances, applying the new technology to the ever-growing problem seems like a good idea. Creating tooling that helps organize the new video content, or improves reflection on and recall of the content, seems valuable. But is it just a novelty or a future necessity? If it’s a necessity, will it be a commodity and common practice, or specialized enough to demand variety in offerings?

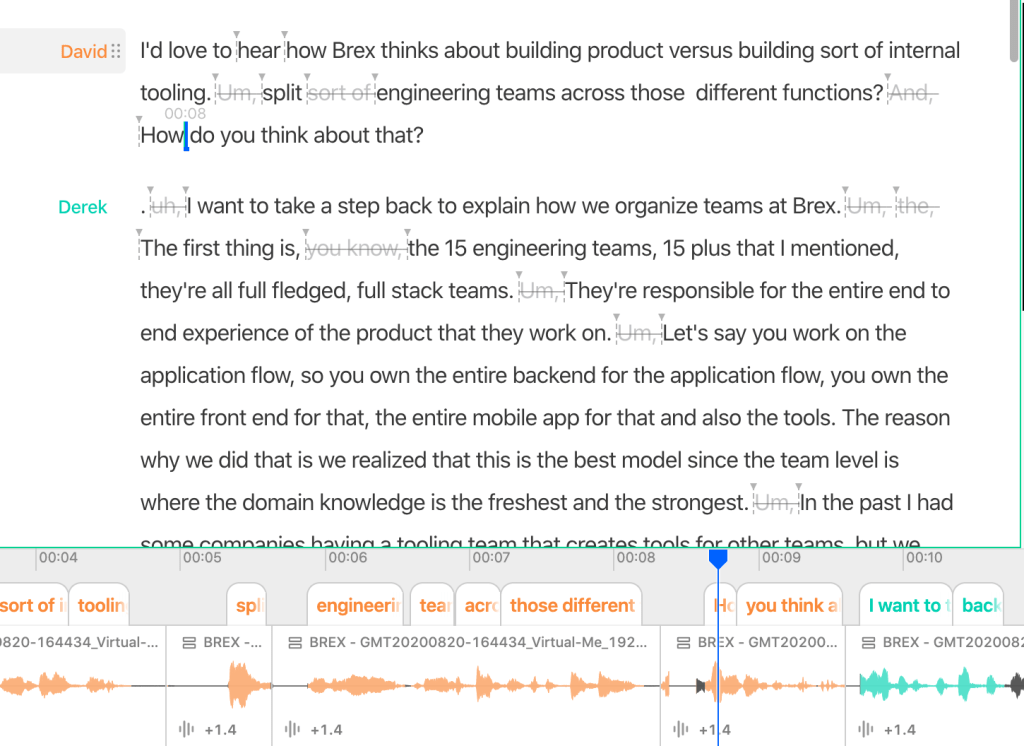

Applying speech-to-text processing to video content is cheap, so analyzing everything creates a new possibility: media that was not machine readable becomes a resource that didn’t previously exist. Not only do we have video that didn’t exist before, but we have a whole category of text content that was non-existent. At the basic level, we can search video. At the more advanced level, we can quantify qualities of the video at scale. In the past, the valuable applications for this were around phone call analysis, but now they apply to the video calls of sales teams or the user interviews of product teams.
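To make the "search video" and "quantify at scale" ideas concrete, here is a minimal sketch. It assumes a speech-to-text service has already turned each video into timestamped text segments; the `Segment` structure, the function names, and the sample data are all hypothetical and only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One timestamped piece of a transcript (hypothetical structure)."""
    video_id: str
    start_seconds: float
    text: str


def search_transcripts(segments, query):
    """Return segments whose text contains every query term.

    Matching is a simple case-insensitive substring check.
    """
    terms = query.lower().split()
    return [s for s in segments if all(t in s.text.lower() for t in terms)]


def mention_rate(segments, term):
    """Fraction of distinct videos that mention the term at least once."""
    videos = {s.video_id for s in segments}
    if not videos:
        return 0.0
    hits = {s.video_id for s in segments if term.lower() in s.text.lower()}
    return len(hits) / len(videos)


# Made-up sample data standing in for real speech-to-text output.
segments = [
    Segment("sales-call-1", 12.0, "Thanks for joining, let's talk about pricing."),
    Segment("sales-call-1", 95.5, "The onboarding flow was confusing."),
    Segment("user-interview-7", 30.0, "Pricing felt fair, but export is missing."),
]

# Basic level: jump to the moments in each video where a topic comes up.
for match in search_transcripts(segments, "pricing"):
    print(match.video_id, match.start_seconds)

# More advanced level: quantify how often a topic appears across videos.
print(mention_rate(segments, "onboarding"))
```

The search part is close to trivial once the text exists, which is part of why it feels more like a commodity than a lasting source of value.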

Again, this being the case, I find it falls into the trap of being a novel and immediately useful solution that is far from having long-term value. I imagine the way to think about the new machine-readable video is that it unlocks a new form of organizing. The ability to organize content is valuable on the surface, but the content being organized needs to be worth the effort.

For one, when video is being produced at scale, in the way that it is today, the shelf life of content is quite low. If you record a call for a product interview today, then when that product changes next month, the call is no longer valuable. Or at least the value of the call declines in proportion to how much the product changes. Let’s call this shelf life.
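As a rough way to picture shelf life, here is a minimal sketch of the proportional decline described above. The linear formula, the function name, and the idea of expressing product change as a fraction between 0 and 1 are my assumptions for illustration, not a measured model.

```python
def remaining_value(initial_value, product_change_fraction):
    """Value left in a recording after the product has changed.

    Assumes a linear decline: if 25% of the product changed, the
    recording loses 25% of its value. The fraction is clamped to
    the range 0..1 so the value never goes negative.
    """
    clamped = min(max(product_change_fraction, 0.0), 1.0)
    return initial_value * (1.0 - clamped)


# A call worth 100 "units" after a quarter of the product has changed.
print(remaining_value(100.0, 0.25))  # 75.0
```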

Interestingly, for individuals in the social media space, the notion of shelf life was given a sexy term: ephemerality. Since there is no cost to produce content individually, the negatives of having content with a short shelf life are outweighed by the positives, and among many other things, this became a positive differentiator.

For businesses though, the creation of media is often far from free. Not only is the time of employees valuable, but the investment behind certain types of content is not immediately returned. So while short-shelf-life video is already widely in use, the question that comes to my mind is: what are the future industries in this new space that don’t have a near-term end of the road?

My general take is that organizing content is a limited venture. Having immediate access to content is useful, but at some level it is a novelty unless the content itself has a long shelf life. Going back to the trucking analogy, while trucking is a commodity, there are industries that were enabled by trucking, like the Walmarts, and their equivalents are bound to emerge in this new Zoom-based freight model. I imagine that, while the shuttling of goods for commerce is important in freight, the point of value in the new ecosystem is going to be around helping businesses increase the value of their already existing content.

Might I even say, helping businesses “milk” the value.