I was stuck on a problem recently and wanted to write it up. Simplified, the problem comes down to the following:

- I have a box in the browser with fixed dimensions.

- I have a large number of words of varying sizes that will fill the box.

- If a full box is considered a “frame”, I wanted to know how many frames it would take to use up all the words.

- Similarly, I needed to know which frame a word would be rendered in.

This process is simple if the words are all rendered on the page, because the dimensions of each word can be measured individually. Once each word has a width and height, it's just a matter of deciding how many words fit in each row, and how many rows fit before the box is full.
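To make the row and frame bookkeeping concrete, here is a minimal sketch of a greedy fill. It assumes the word widths are already known, that every row has the same line height, and that words are placed in order. The names `assignFrames`, `frameCount`, and `Placement` are made up for illustration and are not from any library.

```typescript
// Hypothetical result shape: where each word ends up.
interface Placement {
  word: number;  // index of the word in the input list
  frame: number; // which full box ("frame") the word lands in
  row: number;   // row index inside that frame
  x: number;     // horizontal offset of the word inside its row
}

// Greedy fill: place words left to right, wrap to a new row when the next
// word would overflow, and roll over to a new frame when the rows run out.
// Assumes a uniform line height, which is a simplification.
function assignFrames(
  wordWidths: number[],
  boxWidth: number,
  boxHeight: number,
  lineHeight: number,
  gap: number = 0
): Placement[] {
  // At least one row per frame, even if the box is shorter than a line.
  const rowsPerFrame = Math.max(1, Math.floor(boxHeight / lineHeight));
  const placements: Placement[] = [];
  let line = 0; // running row index across all frames
  let x = 0;    // where the next word would start in the current row

  wordWidths.forEach((width, word) => {
    // Wrap only if the row already holds something, so an oversized word
    // still gets a row of its own instead of looping forever.
    if (x > 0 && x + width > boxWidth) {
      line += 1;
      x = 0;
    }
    placements.push({
      word,
      frame: Math.floor(line / rowsPerFrame),
      row: line % rowsPerFrame,
      x,
    });
    x += width + gap;
  });

  return placements;
}

// Number of frames needed is the last word's frame index plus one.
function frameCount(placements: Placement[]): number {
  return placements.length === 0
    ? 0
    : placements[placements.length - 1].frame + 1;
}
```

With this shape, "which frame is word N in" is a lookup on `placements[N].frame`, and the total number of frames falls out of the last placement.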

I learned this problem is similar to the knapsack problem, bin/rectangle packing, or the computer science text-justification problem.

The hard part was deciding how to gather the words' dimensions, given that the goal is to calculate this information before the content is rendered.

Surprisingly, thanks to my experience with fonts, I am fairly well suited to solving this problem, and I thought I would jot down some notes for anyone else. While searching for a solution, I noticed a number of people in StackOverflow posts saying that this problem could not be solved, for a variety of correct-sounding but wrong reasons.

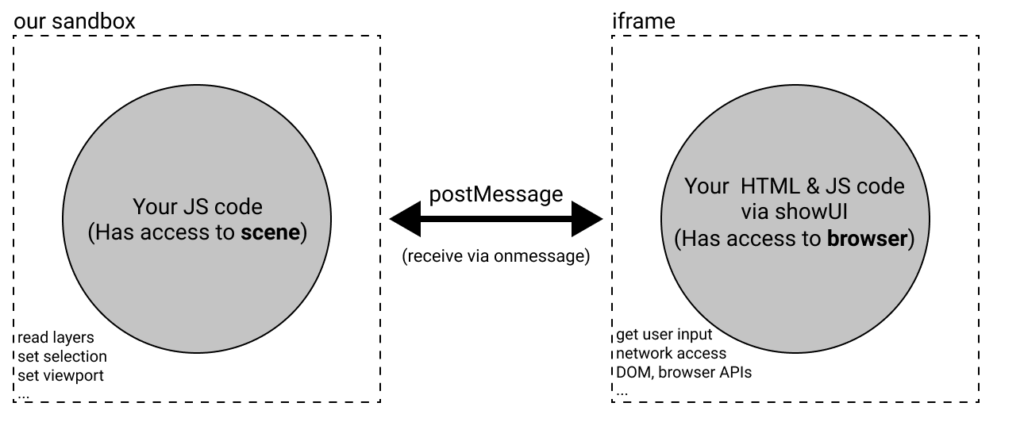

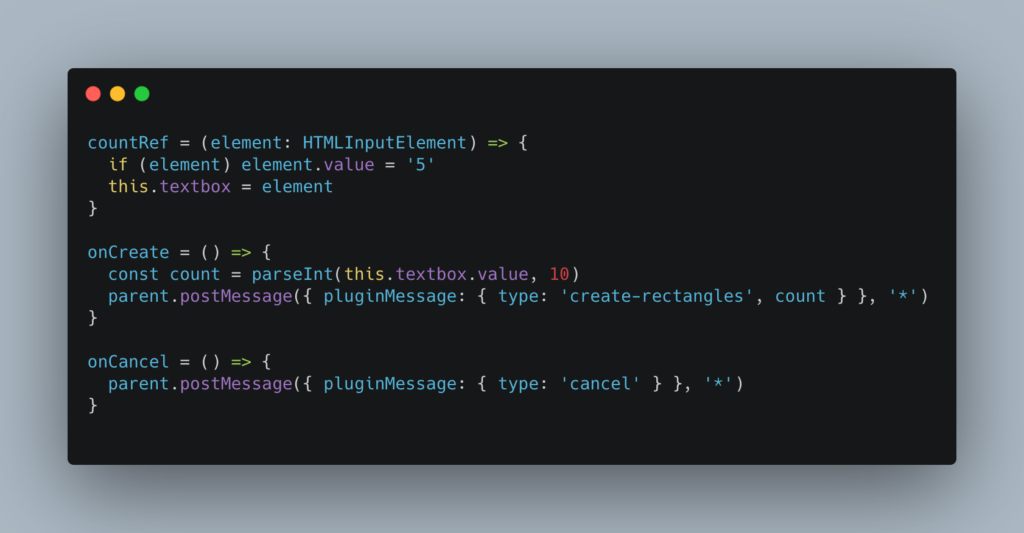

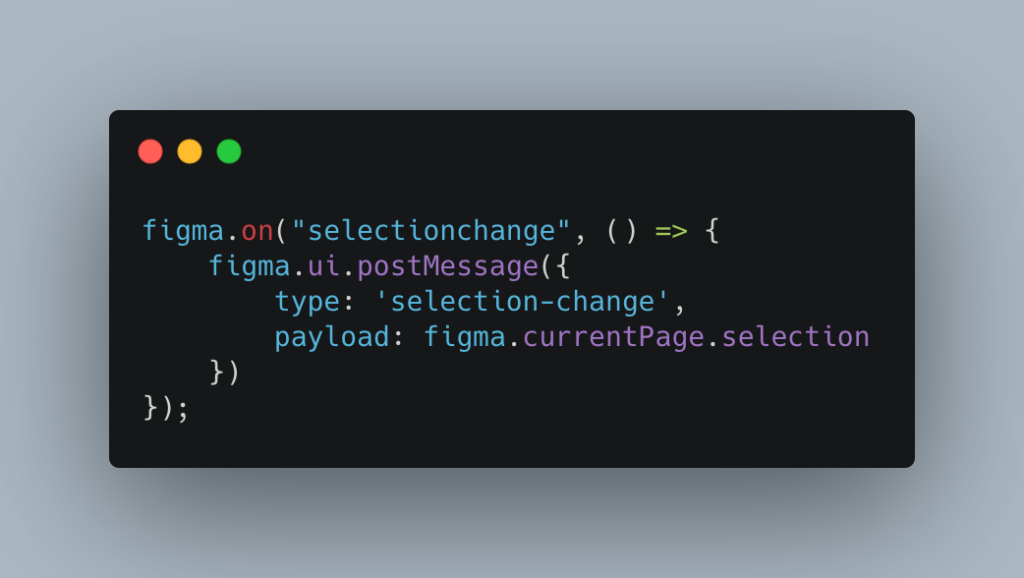

When it comes to text rendering in a browser, there are two main steps that take place, which can be emulated in JavaScript. The first is text shaping, and the second is layout.

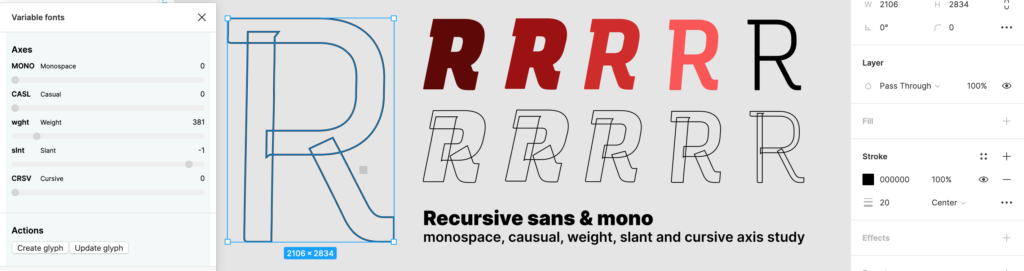

The modern way of handling these is a pair of open-source libraries called FreeType (written in C) and HarfBuzz (written in C++). Combined, these two libraries will read a font file, render the glyphs in the font, and then lay out the rendered glyphs. While this sounds trivial, it’s important because behind the scenes a glyph is more or less a vector outline, and something needs to determine how it will be displayed on a screen. Also, each glyph is laid out depending on its context. It will render differently based on which characters it’s next to and where in a sentence or line it is located.

There's a lot that can be said about the points above, and I am far from an expert on them.

The key point to take away is that you can calculate the bounding box of a glyph, word, or string given the font and the parameters for rendering the text.
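As a rough illustration of that idea, the sketch below computes a string's box from font metrics alone, with no rendering involved. It assumes a simple left-to-right script, one glyph per character, and a hand-written metrics table. The `FontMetrics` shape and `measureText` function are hypothetical; in practice the numbers would come from the font file, and a real shaper such as HarfBuzz also handles ligatures, substitutions, and complex scripts that this ignores.

```typescript
// Hypothetical metrics table; values are in font units, as stored in a font.
interface FontMetrics {
  unitsPerEm: number;               // size of the font's design grid
  ascender: number;                 // distance above the baseline
  descender: number;                // distance below the baseline (negative)
  advances: Record<string, number>; // advance width per character
  kerning: Record<string, number>;  // pair adjustments, keyed by two characters
}

interface Box {
  width: number;
  height: number;
}

// Sum the advance widths (plus pair kerning) in font units, then scale to
// pixels with fontSize / unitsPerEm. Characters missing from the table
// contribute zero width here, which is a simplification.
function measureText(text: string, font: FontMetrics, fontSizePx: number): Box {
  const scale = fontSizePx / font.unitsPerEm;
  const chars = Array.from(text);
  let units = 0;

  chars.forEach((ch, i) => {
    units += font.advances[ch] ?? 0;
    if (i > 0) {
      // Context matters: the same glyph sits closer to some neighbours.
      units += font.kerning[chars[i - 1] + ch] ?? 0;
    }
  });

  return {
    width: units * scale,
    height: (font.ascender - font.descender) * scale,
  };
}

// Made-up numbers purely for demonstration, not taken from a real font.
const demoFont: FontMetrics = {
  unitsPerEm: 1024,
  ascender: 820,
  descender: -204,
  advances: { A: 640, V: 640 },
  kerning: { AV: -128 },
};

const demoBox = measureText("AV", demoFont, 16);
```

The widths produced this way are the kind of input the row and frame calculation needs, all before anything is drawn on screen.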

I have to thank Rasmus Andersson for taking time to explain this to me.

Side note

Today, I had a problem that I couldn’t figure out for the life of me. It may have been the repeated nights of not sleeping, but it was also a multi-layered problem that I understood only intuitively. I just didn’t have a framework for breaking it apart and working out how to approach it. In a broad attempt to get the internet’s help, I posted a tweet with a Zoom link and called for help. Surprisingly, it was quite successful, and over a two-hour period I was able to find a solution.

I’m genuinely impressed by the experience, and highly encourage others to do the same.

One more note: there is a great StackOverflow answer on this topic: https://stackoverflow.com/questions/43140096/reproduce-bounding-box-of-text-in-browsers