This is a follow-up on my process of developing familiarity with computer vision and machine learning techniques. As a web developer (read as “Rails developer”), I find this growing sphere exciting, but I don’t work with these technologies on a day-to-day basis. This is month three of a two-year journey to explore this field. If you haven’t already, you can read Part 1 here: From webdev to computer vision and geo and Part 2 here: Two months exploring deep learning and computer vision.

Overall Thoughts

Rails developers are good at quickly building out web applications with very little effort. Between scaffolds, clear model-view-controller logic, and the plethora of Ruby gems at your disposal, Rails applications with complex logic can be spun up in a short amount of time. For example, I wouldn’t blink at building an application that required user accounts, file uploads, and various feeds of data. I could even make it highly testable with great documentation. Between Devise, Carrierwave (or the many other file upload gems), Sidekiq, and all the other accessible gems, I would be up and running on Heroku within 15 minutes.

Now, add a computer vision or machine learning task and I would previously have had no idea where to go. Even as I explore this space, I still struggle to find uses for machine learning concepts (neural nets and deep learning) in practical applications. The most practical ideas are word association or image analysis. That being said, the most interesting ideas (which I have yet to find practical applications for) are around trend detection and generative adversarial networks.

As a software engineer, I have found it hard to understand the practical value of machine learning in the applications I build. There is a lot of writing around models (in the machine learning sense, rather than the web application/database sense), neural net architecture, and research, but I haven’t seen as much around the practical applications for a web developer like myself. As a result, I decided to build out a small part of a project I’ve been thinking about for a while.

The project was meant to detect good graffiti on Instagram. The original idea was to use machine learning to qualify what “good graffiti” looked like, and then run the machine learning model to detect and collect images. In concept the idea sounds great, but I had no idea how to “train a machine learning model”, and I had very little sense of where to start.

I started building out a simple part of the project with the understanding that I would need to “train” my “model” on good graffiti. I picked a few Instagram accounts of good graffiti artists, where I knew I could find high quality images. After crawling the Instagram accounts (which took much longer than expected due to Instagram’s API restrictions) and analyzing the pictures, I realized a big problem at hand. The selected accounts were great, but had many non-graffiti images, mainly of people. To get the “good graffiti” images, I was first going to need to filter out the images of people.

By reviewing the pictures, I found that as many as four out of every ten images were of a person or had a person in them. As a result, before even starting the task of “training” a “good graffiti” “model”, I needed to just get a set of pictures that didn’t contain any people.

(Side note for non-machine learning people: I’m using quotations around certain words because you and I probably have an equal understanding of what those words actually mean.)

Rather than having a complicated machine learning application that did some complicated neural network-deep learning-artificial intelligence-stochastic gradient descent-linear regression-bayesian machine learning magic, I decided to simplify the project into building something that detected humans in a picture and flagged them. I realized that many examples of machine learning tutorials I had read before showed how to do this, so it was a matter of making those tutorials actually useful.

—

The application (with links to code)

I was using Ruby on Rails for the web applications that managed the database and rendered content. I did most of the image crawling of Instagram using Ruby, via Sidekiq, a background job library backed by Redis. This makes running delayed tasks easy.

For the machine learning logic, I had a code example for object detection, using OpenCV, from a PyImageSearch.com tutorial. The code example was not complete, in that it detected one of 30 different items in the trained image model, one of them being people, and drew a box around the detected object. In my case, I slightly modified the example and placed it inside a simple web application based on Flask.

I made a Flask application with an endpoint that accepted a JSON blob with an image URL. The application downloaded the image URL and processed it through the code example that drew a bounding box around the detected object. I only cared about the code example detecting people, so I created a basic condition to give a certain response for detecting a person and a generic response for everything else.
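The request handling described above can be sketched roughly as follows. This is not the original project code: the `image_url` field name, the `"person"` / `"other"` responses, and the `stub_detector` function (standing in for the OpenCV detection step) are all assumptions made for illustration. The logic is written as a plain function so it runs without any dependencies; in a Flask app, the route would be a thin wrapper that passes the request body to it.

```python
import json

PERSON_LABEL = "person"  # assumed label name; depends on the model's class list


def stub_detector(image_url):
    """Hypothetical stand-in for the OpenCV detector; returns detected labels."""
    return ["person", "bicycle"]


def handle_request(body, detect=stub_detector):
    """Turn a JSON request body into a (status_code, response_dict) pair."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "body must be valid JSON"}

    # Guard against valid JSON that is not an object, e.g. a bare list.
    image_url = payload.get("image_url") if isinstance(payload, dict) else None
    if not image_url:
        return 400, {"error": "image_url is required"}

    labels = detect(image_url)

    # The only distinction the application cares about: person or not.
    if PERSON_LABEL in labels:
        return 200, {"image_url": image_url, "result": "person"}
    return 200, {"image_url": image_url, "result": "other"}
```

Passing the detector in as an argument keeps the web-facing logic testable without loading a model, which is handy when the Rails side only needs to agree on the shape of the JSON response.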

This simple endpoint was the machine learning magic at work. Sadly, it was also the first time I’d seen a practical, usable example of how the complicated machine learning “stuff” integrates with the rest of a web application.

For those who are interested, the code for these is below.

—

Concluding Realizations

I was surprised that I hadn’t seen a simple Flask-based implementation of a deep neural network before. Based on this implementation, I also feel that when training a model isn’t involved, applying machine learning in an application is just like having a library with a useful function. I’m assuming that in the future, the separation of the model and the libraries for utilizing the models will be simplified, similar to how a library is “imported” or added using a bundler. My guess is some of these tools exist, but I am not deep enough yet to know about them.

While reviewing how to access the object detection logic, I found a few services that seemed relevant, but eventually were not quite what I needed. Specifically, there is a tool called TensorFlow Serving, which seems like it should be a simple web server for TensorFlow, but isn’t quite simple enough. It is possibly what I need, but a server or web application that solely runs TensorFlow seems quite difficult to set up.

Web service based machine learning

A lot of the machine learning examples that I find online are very self-contained. The examples start with the problem, then provide the code to run the example locally. Often the image is an input provided by file path via a command line interface, and the output is a Python-generated window that displays a manipulated image. This isn’t very useful as a web application, so making a REST endpoint seems like a basic next step.

Building the machine learning logic into a REST endpoint is not hard, but there are some considerations worth making. In my case, the server was running on a desktop computer with enough CPU and memory to process requests quickly. This might not always be the case, so a future endpoint might need to run tasks asynchronously using something like Redis. On slower hardware, an HTTP request would most likely hang and possibly time out, so some basic micro-service logic would need to be considered for slow queries.
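The asynchronous pattern hinted at here can be sketched with only the Python standard library. This is an illustration rather than something I ran in the project: an in-process `queue.Queue` stands in for a Redis-backed queue, and `submit`, `worker`, `jobs`, and `slow_detect` are hypothetical names. The idea is that the HTTP handler enqueues the work and returns a job id right away, and the client checks back later for the result.

```python
import queue
import threading
import uuid

jobs = {}                # job_id -> {"status": ..., "result": ...}
pending = queue.Queue()  # stand-in for a Redis list or similar broker


def slow_detect(image_url):
    """Placeholder for the slow model call."""
    return {"person_detected": False}


def submit(image_url):
    """What the HTTP handler would do: enqueue the job and return immediately."""
    job_id = uuid.uuid4().hex
    jobs[job_id] = {"status": "queued", "result": None}
    pending.put((job_id, image_url))
    return job_id


def worker():
    """Background loop that processes queued jobs one at a time."""
    while True:
        job_id, image_url = pending.get()
        try:
            jobs[job_id] = {"status": "done", "result": slow_detect(image_url)}
        except Exception as exc:
            jobs[job_id] = {"status": "failed", "result": str(exc)}
        finally:
            pending.task_done()


# A daemon thread exits with the main program instead of blocking shutdown.
threading.Thread(target=worker, daemon=True).start()
```

A second endpoint would then look up `jobs[job_id]` so the caller can poll for the status. With a real broker, the worker would live in a separate process, much like a Sidekiq worker does on the Rails side.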

Binary expectations and machine learning brands

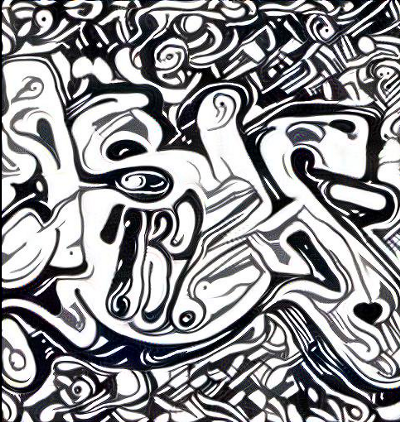

A big problem with the final application was that processed graffiti images were sometimes falsely flagged as people. When the painting contained features that looked like a person, such as a face or body, the object classifier falsely flagged the painting. Conversely, there were times when pictures of actual people were not flagged at all.

Web applications require binary conclusions to take action. An image classifier will instead provide a percentage rating of how likely it is that a detected object is present. In larger object detection models, the classifier will report more than one object as potentially detected. For example, there is a 90% chance of a person being in the photo, a 76% chance of an airplane, and a 43% chance of a giant banana. This isn’t very useful when the application processing the responses just needs to know whether or not something is present.
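One common way to bridge that gap is to pick a cut-off and collapse the scores into a yes/no answer. The sketch below uses the numbers from the example above; the `is_present` helper and the 0.8 threshold are assumptions for illustration, not values from my application.

```python
def is_present(scores, label, threshold=0.8):
    """Collapse per-label confidence scores into a yes/no answer for one label."""
    return scores.get(label, 0.0) >= threshold


# Scores taken from the example in the text, expressed as fractions.
scores = {"person": 0.90, "airplane": 0.76, "giant banana": 0.43}

person_found = is_present(scores, "person")
airplane_found = is_present(scores, "airplane")
airplane_found_lenient = is_present(scores, "airplane", threshold=0.7)
```

Where the threshold sits is a judgment call: lowering it catches more real people but also flags more paintings that merely look like people, and raising it does the opposite.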

This brings up the importance of quality in any machine learning based process. Given that very few object classifiers or image based processes are 100% correct, the quality of an API is hard to gauge. When it comes to commercial implementations of these object classifier APIs, the brands of services will be largely impacted by the edge cases of a few requests. Because machine learning itself is so opaque, the brands of the service providers will be all the more important in determining how trustworthy these services are.

Conversely, because the quality of machine learning tasks varies so greatly, a brand may struggle to showcase its value to a user. When the binary quality of solving a machine learning task is pegged to a dollar amount, for example per API request, the ability to do something for free will be appealing. From the perspective of price, rolling your own free object classifier will be better than using a third-party service. The branded machine learning service market still has a long way to go before becoming clearly preferred over self-hosted implementations.

Specificity in object classification is very important

Finally, when it comes to any machine learning task, specificity is your friend. In particular, when it comes to graffiti, it’s hard to qualify something that varies so much in form. Graffiti itself is a category that encompasses a huge range of visual compositions. Even a person may struggle to qualify what is or isn’t graffiti. When compared to detecting a face or a fruit, the specificity of the category is important.

The brilliance of WordNet and ImageNet is the strength of their categorical specificity. By classifying the world through words and their relationships to one another, there is a way to qualify similarities and differences of images. For example, a pigeon is a type of bird, but different from a hawk. All the while, it’s completely different from an airplane or a bee. The relationships between those things allow for clearly classifying what they would be. No such specificity exists in graffiti, but it is needed to properly improve an object classifier.
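To make the idea of categorical relationships concrete, here is a toy sketch. The `PARENT` table is written by hand for illustration and is not real WordNet data, and the function names are hypothetical. It finds the most specific category two words share, which is one rough way to express how alike they are.

```python
# Toy is-a links, written by hand for illustration (not real WordNet data).
PARENT = {
    "pigeon": "bird",
    "hawk": "bird",
    "bird": "animal",
    "bee": "insect",
    "insect": "animal",
    "airplane": "vehicle",
    "animal": "thing",
    "vehicle": "thing",
}


def ancestors(word):
    """Return the chain from a word up to the root, starting with the word."""
    chain = [word]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def closest_shared_category(a, b):
    """Return the most specific category that both words fall under, if any."""
    seen = set(ancestors(a))
    for category in ancestors(b):
        if category in seen:
            return category
    return None
```

In this toy table a pigeon and a hawk meet at "bird", a pigeon and a bee only at "animal", and a pigeon and an airplane only at the root. A word with no place in the hierarchy, such as "graffiti" here, shares nothing with anything, which is roughly the problem described above.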

Final final

Overall, the application works and was very helpful. Making this removed more of the mystery around how machine learning and image recognition services work. As I noted above, this process also made me much more aware of the shortfalls of these services and the places where this field is not yet defined. I definitely think this is something that all software engineers should learn how to do. Before the available tools become simple to use, I imagine there will be a good period in which the ecosystem is complicated to navigate. Similar to the browser wars before web standards were formed, there is going to be a lot of vying for market share amongst the machine learning providers. You can already see it between services from the larger companies like Amazon, Google and Apple. At the hardware and software level, this is also very apparent between Nvidia’s CUDA and AMD’s price appeal.

More to come!